An Open Source Benchmark to Measure LLM Accuracy in Financial Retrieval

Background and Methodology

- Five “ungrounded” chatbots: ChatGPT (GPT‑5 Thinking), Claude (Opus 4.1), Gemini (Gemini 2.5 Pro), Grok (Grok 4), and Perplexity Finance

- One “grounded” chatbot: Claude + Daloopa MCP (Opus 4.1 + MCP)

We challenged each chatbot to answer 500 single‑number prompts directly in its own web app. The grounded chatbot has access to a dedicated financial-retrieval tool (Daloopa MCP), whereas the ungrounded chatbots have all of their built-in web-search tools enabled but no finance-specific retrieval tool. Here is everything we learned, and how we did it:

Accuracy

Key Takeaways

- Most general‑purpose chatbots miss single‑number financial questions surprisingly often, even with web and EDGAR search enabled.

- Grounded retrieval wins: Claude + Daloopa MCP delivered 94.2% exact‑match accuracy, 30.4 pp above ChatGPT (63.8%). With a 1% tolerance it reached 97.2% (+19.4 pp).

- Among chatbots without finance-specific MCP connections, ChatGPT performed best for numeric retrieval (63.8% exact match, 83.1% within ±5%). It can locate and read SEC filings, and will sometimes open company PDFs for non‑SEC issuers, but it still cites or relies on unvetted secondary sources (blogs/news) and does not ground its answers in the exact source passage.

- On the 170 companies whose fiscal year is offset from the calendar year, Claude + Daloopa MCP still leads (92.4%) vs. 42.9% for the next best general model.

- Misinterpretation and refusal patterns: Gemini showed the highest rate of question misinterpretation, while Claude (without MCP) produced the most refusals/non‑answers.

Error breakdown

- Ambiguous Question Handling: model chooses an interpretation not consistent with our labeling instructions.

- Rounding / Formatting: considerable rounding, decimal misplacement, misplaced thousands separators, or sign errors (+/−).

- Non‑Answer / Refusal: returns boilerplate text or declines despite available ground truth.

- Fiscal vs. Calendar Period Conversion: mixing fiscal and calendar year/quarter or using the wrong reporting basis.

- Period Shift / Trailing Twelve Months: picking the prior/next period (e.g., Q3 vs. Q2) or TTM instead of the asked period.

- Grounding with an MCP reduces both ambiguity and period mistakes. With MCP, errors are few and mostly Rounding/Formatting (18), plus a handful of Ambiguous (5); period issues are rare (4 fiscal, 1 shift), and refusals are near zero (1).

- General-purpose models fail in different ways:

- ChatGPT: largest bucket is Rounding/Formatting (83), then Ambiguous (55), with moderate Period Shift (26).

- Grok 4: Ambiguous (79) and Rounding (85) are neck‑and‑neck; Period Shift (38) is higher than peers.

- Perplexity Finance: dominated by Ambiguous (177), plus Rounding (87) and notable Refusals (36).

- Claude (no MCP): unusually high Refusals (159); Rounding (90) and Ambiguous (71) follow.

- Gemini 2.5 Pro: errors are overwhelmingly Ambiguous (352), with Rounding (48) next; period issues are smaller (7 fiscal, 16 shifts).

Net‑net: Ambiguous interpretation is the dominant failure mode overall, but for some models (e.g., ChatGPT) Rounding/Formatting is the single largest bucket, suggesting that stricter output formatting and unit normalization could meaningfully lift accuracy even without changing retrieval.
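A quick way to check this reading is to tally the counts quoted in the bullets above. The sketch below is a minimal illustration that uses only those published numbers; the dictionary layout and the helper names `largest_bucket` and `totals_by_category` are our own, and categories a bullet does not mention are left out rather than set to zero, so every per-model total except MCP's is partial.

```python
# Error counts as quoted in the "Error breakdown" bullets above.
# Categories not mentioned for a model are omitted (unknown), not zero.
ERRORS = {
    "Claude + Daloopa MCP": {"Rounding": 18, "Ambiguous": 5, "Fiscal": 4, "Shift": 1, "Refusal": 1},
    "ChatGPT": {"Rounding": 83, "Ambiguous": 55, "Shift": 26},
    "Grok 4": {"Ambiguous": 79, "Rounding": 85, "Shift": 38},
    "Perplexity Finance": {"Ambiguous": 177, "Rounding": 87, "Refusal": 36},
    "Claude (no MCP)": {"Refusal": 159, "Rounding": 90, "Ambiguous": 71},
    "Gemini 2.5 Pro": {"Ambiguous": 352, "Rounding": 48, "Fiscal": 7, "Shift": 16},
}

def largest_bucket(counts):
    """Return the (category, count) pair with the most errors."""
    return max(counts.items(), key=lambda item: item[1])

def totals_by_category(errors):
    """Sum the quoted counts for each category across all chatbots."""
    totals = {}
    for counts in errors.values():
        for category, n in counts.items():
            totals[category] = totals.get(category, 0) + n
    return totals

for bot, counts in ERRORS.items():
    print(bot, largest_bucket(counts))
print(sorted(totals_by_category(ERRORS).items(), key=lambda kv: -kv[1]))

# Consistency check: MCP's five categories should cover all of its misses,
# i.e. 500 questions * (1 - 0.942 exact-match accuracy) = 29 errors.
assert sum(ERRORS["Claude + Daloopa MCP"].values()) == round(500 * (1 - 0.942))
```

With these counts, Ambiguous sums to 739 across chatbots versus 411 for Rounding/Formatting, while Rounding/Formatting is the largest single bucket for both ChatGPT and Grok 4.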

Error distribution by chatbot (sorted by total errors)

Examining errors by category and LLM

Rounding and interpretation errors

For this question, ChatGPT and Claude with Daloopa MCP answered correctly. Grok 4 rounded its answer to 1,459 (off by 0.3 million), Gemini and Perplexity returned entirely different numbers (ambiguous question handling), and Claude without MCP reported unadjusted EBITDA but could not locate the adjusted figure.

Question:

What was YDUQS’ full year 2022 Adjusted EBITDA in BRL millions?

Expected answer:

YDUQS’ Adjusted EBITDA for the full year 2022 was 1,458.7 million

ChatGPT:

R$ 1,458.7 million. (yduqs.com.br)

Claude:

I’ll search for YDUQS’ 2022 Adjusted EBITDA information for you. Let me search for more specific information about YDUQS’ 2022 financial results. Let me fetch the YDUQS investor relations page directly to find their 2022 financial reports. Let me search more specifically for YDUQS’ 2022 financial results. Let me search for YDUQS’ full year 2022 EBITDA specifically. Let me fetch YDUQS’ presentation page to find their 2022 annual report. Let me search for YDUQS’ complete 2022 financial results. Let me search once more for YDUQS’ complete 2022 annual results with their adjusted EBITDA figure. Let me search for YDUQS’ 2022 annual report or investor presentation with the complete financial results. Let me search for the exact YDUQS 2022 full year EBITDA figure with a more specific query. Based on my search of YDUQS’ financial reports, I found information about their 2022 EBITDA performance:

For the full year 2022, YDUQS reported EBITDA of R$ 1.33 billion, which was 8.2% higher than the previous year.

Additionally, I found the following quarterly Adjusted EBITDA figures for 2022:

– Q1 2022: R$ 400.4 million

– Q4 2022: R$ 313.5 million

While I found these specific quarterly figures and the annual EBITDA of R$ 1.33 billion, I was unable to locate the specific full year 2022 **Adjusted** EBITDA figure in the available financial reports and announcements. The R$ 1.33 billion figure appears to be the reported EBITDA rather than the adjusted figure.

If you need the precise adjusted EBITDA figure for 2022, I’d recommend checking YDUQS’ official investor relations website or their complete 2022 annual report, which would contain the detailed reconciliation between reported and adjusted EBITDA.

Claude with Daloopa MCP:

I’ll help you find YDUQS’ full year 2022 Adjusted EBITDA. Let me search for the company in the Daloopa database first. Now let me search for the available EBITDA series for YDUQS, focusing on the 2022 full year period. Now let me retrieve the specific Adjusted EBITDA data for full year 2022. YDUQS’ full year 2022 Adjusted EBITDA was [R$1,458.7 million](https://daloopa.com/src/69127815).

Grok:

**1,459**

YDUQS (Yduqs Participações S.A.) reported an Adjusted EBITDA of R$1,459 million for the full year 2022, up 9% from R$1,337 million in 2021. This figure comes from the company’s official 4Q22 and full-year earnings presentation, which highlights operational growth in premium, on-campus, and digital segments, along with an improved EBITDA margin. It aligns with quarterly breakdowns: approximately R$1,145 million for the first nine months and R$314 million for the fourth quarter. Independent sources like MarketScreener confirm the 2022 value at R$1,459 million.

Gemini:

For the full fiscal year of 2022, Brazilian education company YDUQS reported an Adjusted EBITDA of **BRL 1,543.9 million**.

This figure, which translates to approximately 1.54 billion Brazilian Reais, was a key indicator of the company’s operational profitability for that period. The result was detailed in YDUQS’s annual financial statements for the year ending December 31, 2022. Adjusted EBITDA (Earnings Before Interest, Taxes, Depreciation, and Amortization) is a non-GAAP metric used by the company to provide a clearer view of its operational performance by excluding certain non-cash and non-recurring expenses.

Perplexity Finance:

YDUQS’ full year 2022 Adjusted EBITDA was BRL 1,107 million. (yduqs)

Fiscal/calendar period confusion

- Claude with Daloopa MCP and Perplexity answered correctly (¥10,969 million).

- ChatGPT and Grok used the fiscal 2023 value (¥14,569 million) instead of fiscal 2022.

- Gemini misinterpreted the question.

- Claude (without MCP) could not find the figure.

Question:

What was Hazama Ando Corp’s overseas building construction backlog at the end of fiscal year 2022 in million Japanese yen?

ChatGPT:

¥14,569 million.

Incorrect

Claude:

While I found that Hazama Ando publishes a Corporate Report as a tool for communicating with stakeholders, and Corporate Report 2022 is an integrated report consisting of financial information, the actual backlog figures were not accessible through my searches.

To obtain this specific financial metric, I would recommend:

1. Checking Hazama Ando’s official investor relations page directly for their annual reports or fact books

2. Looking at their financial results presentations from May 2023 (when fiscal year 2022 results would have been announced)

3. Checking financial databases like Bloomberg or specialized construction industry reports that might have this detailed information

The company does have overseas operations, as it has overseas offices in Asia, especially in the South Asian countries like Nepal, as well as in the United States, Mexico, Central and South America, but the specific backlog amount was not available in the search results.

Claude with Daloopa MCP:

I’ll help you find Hazama Ando Corp’s overseas building construction backlog at the end of fiscal year 2022. Let me search for this information in the financial database. Now let me search for the overseas building construction backlog series for Hazama Ando Corp. Great! I found the series for overseas building construction backlog. Let me retrieve the specific value for fiscal year 2022. Based on the financial data from Daloopa, Hazama Ando Corp’s overseas building construction backlog at the end of fiscal year 2022 was ¥10,969 million.

Data sourced from Daloopa

Grok:

14,569

Hazama Ando Corporation’s consolidated financial results for the fiscal year ended March 31, 2023 (FY2022) report the year-end order backlog for overseas building construction as ¥14,569 million. This figure comes from the “Year-end backlog for the construction business” section, which breaks down the backlog by segment, including overseas building construction. The total construction backlog was ¥518,683 million, with building construction at ¥198,538 million (encompassing both domestic and overseas). Overseas activities were a small portion, reflecting limited international exposure in building projects.

Gemini:

For the fiscal year ended March 31, 2022, Hazama Ando Corporation’s overseas building construction backlog was 144,385 million Japanese yen

This figure represents the total value of uncompleted overseas building construction projects at the end of the fiscal period. The data was disclosed in the company’s financial results and supplemental materials, such as its investor fact book, for the fiscal year 2022.

Perplexity:

Hazama Ando Corp’s overseas building construction backlog at the end of fiscal year 2022 was approximately 10,969 million Japanese yen. (ad-hzm)

Unable to find answer

Only Claude with Daloopa MCP found the correct answer. ChatGPT, Claude (without MCP), Grok, and Perplexity acknowledged that they could not find it, whereas Gemini confidently provided a wrong value.

Question: What was NCR Voyix Corporation’s retail hardware gross profit in USD millions for Q1 2025?

Expected answer: NCR Voyix Corporation’s retail hardware gross profit was $9 million in Q1 2025.

ChatGPT:

I can’t find a figure where NCR Voyix explicitly reports “retail hardware gross profit” for Q1 2025. In the company’s public materials for that quarter, they disclose:

* Company-wide **product (hardware) gross margin** of **$7 million**, not broken out by segment. ([Last10K][1])

* **Retail hardware revenue** of **$96 million** (from the Q1 2025 earnings slides), but no accompanying retail-hardware gross profit line. ([SEC][2])

They do show Retail segment revenue $420 M and cost of revenue $301 M (implying total Retail segment gross profit ≈ $119 M), but that’s for the whole Retail segment, not specifically hardware. ([Last10K][1])

If you have the “VYX Q1 2025 Metrics File” from their IR site, that’s the one place it might be itemized; it isn’t in the 10-Q, press release, or slide deck that I can access. ([SEC][3])

[1]: https://last10k.com/sec-filings/report/70866/000007086625000043/ncr-20250331.htm “ncr-20250331”

[2]: https://www.sec.gov/Archives/edgar/data/70866/000007086625000041/exhibit992-q12025callsli.htm “exhibit992-q12025callsli”

[3]: https://www.sec.gov/Archives/edgar/data/70866/000007086625000041/ncr-20250508.htm?utm_source=chatgpt.com “ncr-20250508 – SEC.gov”

Error: Non‑Answer / Refusal

Reason: Could not find the specific retail hardware gross profit figure, declined to provide answer

Claude:

I’ll search for NCR Voyix Corporation’s Q1 2025 financial results to find their retail hardware gross profit. Let me search for more specific information about NCR Voyix’s Q1 2025 retail hardware gross profit or detailed segment financials. Based on my search of NCR Voyix Corporation’s Q1 2025 financial results, I cannot find the specific retail hardware gross profit figure you’re looking for.

NCR Voyix reported total product revenue of $153 million with cost of products at $146 million for Q1 2025, giving an overall product gross profit of $7 million. However, the company does not separately disclose hardware gross profit specifically for the retail segment.

The available segment information shows that Retail segment total revenue was $420 million with Retail segment Adjusted EBITDA of $65 million, but this includes both hardware and software/services revenue and uses EBITDA rather than gross profit metrics.

NCR Voyix’s financial reporting provides segment-level revenue and EBITDA figures, but does not break out hardware gross profit by segment. The company appears to focus more on total segment performance metrics and recurring revenue metrics rather than segment-specific hardware profitability in their public disclosures.

If you need this specific metric, you might need to contact NCR Voyix’s investor relations directly, as this level of detail is not included in their standard quarterly earnings reports.

Error: Non‑Answer / Refusal

Reason: Could not find the specific retail hardware gross profit figure, declined to provide answer

Claude with Daloopa MCP:

I’ll help you find NCR Voyix Corporation’s retail hardware gross profit for Q1 2025. Let me search for this information in the Daloopa database. NCR Voyix Corporation’s retail hardware gross profit for Q1 2025 was $9.0 million.

Data sourced from Daloopa

Correct

Grok:

NCR Voyix Corporation’s Q1 2025 earnings release and 10-Q filing do not provide a specific breakdown of gross profit for the retail hardware category. The retail segment reported total revenue of $420 million (down 14% year-over-year), with adjusted EBITDA of $65 million (down 24% year-over-year). The decline was primarily attributed to lower hardware revenue and gross profit in the retail segment, but no exact figure for retail hardware gross profit is disclosed. The company’s total product (hardware) revenue was $153 million, with total company gross profit of $135 million (21.9% margin).

Error: Non‑Answer / Refusal

Reason: Stated that specific breakdown is not disclosed, declined to provide answer

Gemini:

For the first quarter of 2025, which ended on March 31, 2025, NCR Voyix Corporation’s gross profit from its **Retail hardware business was $45 million**.

This figure was a component of the company’s overall Retail segment performance, which also includes software and services. The hardware gross profit reflects the direct profitability of the point-of-sale terminals, self-checkout machines, and other physical equipment sold to retail clients.

Error: Ambiguous Question Handling

Reason: Predicted $45 million vs $9 million expected, significant overestimate

Perplexity Finance:

NCR Voyix Corporation’s retail hardware gross profit in USD millions for Q1 2025 is not explicitly reported as a separate figure, but earnings releases confirm that the retail segment experienced a 14% revenue decline, mainly due to drops in hardware sales and gross profit. Specific dollar amounts for retail hardware gross profit are not directly provided in public summaries or earnings call reports; only segment-level trends and margin pressures are discussed. (finance.yahoo+2)

Error: Non‑Answer / Refusal

Reason: Stated that figure is not explicitly reported, declined to provide answer

Period shift & rounding

Gemini and Claude returned exactly the expected number; ChatGPT, Grok, and Perplexity rounded it; and Claude with Daloopa MCP returned a correctly reported value, but for the wrong period (fiscal Q2 2022 instead of fiscal Q3 2021).

Question: What was Hero MotoCorp’s consolidated revenue from operations in INR Crore for Q3 of fiscal year 2021?

Expected answer: Hero MotoCorp’s consolidated revenue from operations for Q3 of fiscal year 2021 was 9827.05 Crore.

ChatGPT:

₹9,827 crore. (auto.hindustantimes.com)

Error: Rounding / Formatting

Reason: Off by 0.05 crore

Claude:

I’ll search for Hero MotoCorp’s Q3 FY2021 consolidated revenue information. Based on the search results, **Hero MotoCorp’s consolidated revenue from operations for Q3 of fiscal year 2021 (October-December 2020) was INR 9,827.05 crore**.

The company’s revenue from operations rose to Rs 9,827.05 crore during the period under review, as compared to Rs 7,074.86 crore in the third quarter of 2019-20, representing a significant year-over-year growth of approximately 39%.

The standalone revenue from operations for the same period was Rs 9,775.77 crore, which aligns with the Rs 9,776 crore figure reported elsewhere, confirming the accuracy of these numbers.

Correct

Claude with Daloopa MCP:

I’ll help you find Hero MotoCorp’s consolidated revenue from operations for Q3 FY2021. Let me search for this information in the financial database. Hero MotoCorp’s consolidated revenue from operations for Q3 of fiscal year 2021 was ₹8,538.85 Crore

Data sourced from Daloopa

Error: Fiscal vs. Calendar Period Conversion

Reason: Used fiscal Q2 2022 data (8538.85 crore) instead of fiscal Q3 2021 data (9827.05 crore)

Grok:

The consolidated revenue from operations for Hero MotoCorp in Q3 of fiscal year 2021 was 9,827 INR Crore.

Error: Rounding / Formatting

Reason: Off by 0.05 crore

Gemini:

For the third quarter of the 2021 fiscal year, Hero MotoCorp’s consolidated revenue from operations was ₹ 9,827.05 Crore.

This figure was reported in the company’s financial results for the quarter ending December 31, 2020. The performance represented a significant 39.11% increase compared to the same period in the previous fiscal year, driven by a strong recovery in demand for two-wheelers.

Correct

Perplexity Finance:

Hero MotoCorp’s consolidated revenue from operations for Q3 of fiscal year 2021 was INR 9,827 Crore. (auto.hindustantimes)

Error: Rounding / Formatting

Reason: Off by 0.05 crore

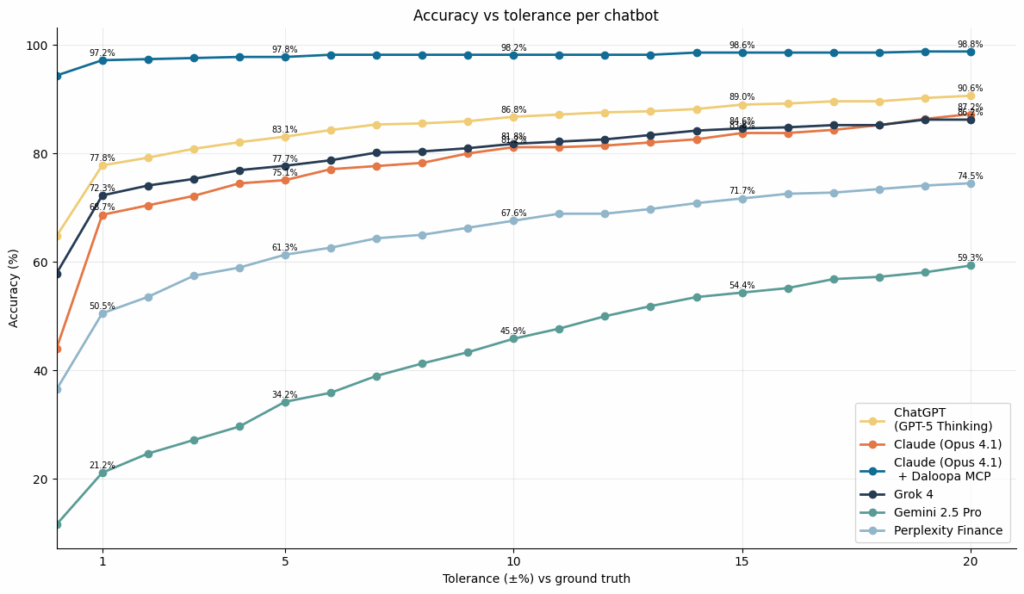

Results With Different Accuracy Thresholds

Exact match accuracy

- Claude + Daloopa MCP leads decisively at 94.2%.

- The best general‑purpose baseline is ChatGPT at 63.8%, followed by Grok 4 at 57.4%. Perplexity Finance (33.8%) and Claude (Opus 4.1) (30.6%) trail, with Gemini 2.5 Pro last at 11.2%.

- The 30–83 point gap between MCP and the other chatbots suggests that most misses are not tiny rounding slips but source or normalization issues that grounded retrieval mitigates.
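The percentage-point gaps follow directly from the exact-match figures in this list. A minimal sketch; `EXACT_MATCH` and `gaps_vs` are illustrative names, not part of the released evaluation harness.

```python
# Exact-match accuracy (%) as reported in this section.
EXACT_MATCH = {
    "Claude + Daloopa MCP": 94.2,
    "ChatGPT": 63.8,
    "Grok 4": 57.4,
    "Perplexity Finance": 33.8,
    "Claude (Opus 4.1)": 30.6,
    "Gemini 2.5 Pro": 11.2,
}

def gaps_vs(reference, scores):
    """Percentage-point gap between the reference chatbot and every other one."""
    ref = scores[reference]
    return {bot: round(ref - s, 1) for bot, s in scores.items() if bot != reference}

gaps = gaps_vs("Claude + Daloopa MCP", EXACT_MATCH)
for bot, gap in sorted(gaps.items(), key=lambda kv: kv[1]):
    print(f"{bot}: {gap} pp")
```

The gap runs from 30.4 pp (ChatGPT) to 83.0 pp (Gemini 2.5 Pro), with Perplexity Finance and Claude (Opus 4.1) both around 60 pp.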

Accuracy at different tolerance thresholds

To give a more complete picture of chatbot performance beyond exact numerical match, we also calculated accuracy at several tolerance thresholds.

- At ±1%, Claude Opus 4.1 + MCP rose to 97.2% while ChatGPT jumped to 77.8% and Grok to 72.3%. Claude (no MCP) made the largest early move, from 30.6% to 68.7%, a signal that many of its misses were rounding/formatting or near‑miss unit issues.

- At ±10%, results converged somewhat: MCP ~98.2%, ChatGPT 88.8%, Grok 81.8%, Claude (no MCP) ~81%, Perplexity 67.6%, and Gemini 45.9%.
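The write-up does not spell out exactly how a tolerance band is applied. The sketch below assumes a relative tolerance measured against the ground-truth value (so ±1% of 1,458.7 is about ±14.6); `within_tolerance`, `accuracy`, and `SAMPLE_PAIRS` are hypothetical names, and the sample pairs are answers quoted in the examples above.

```python
def within_tolerance(predicted: float, expected: float, tol: float) -> bool:
    """True if predicted is within +/- tol (a fraction, e.g. 0.01 for 1%) of expected.

    Assumes a relative tolerance against the ground truth; tol=0.0 reduces
    to plain numeric equality.
    """
    if expected == 0:
        return predicted == 0
    return abs(predicted - expected) <= tol * abs(expected)

def accuracy(pairs, tol):
    """Share of (predicted, expected) pairs that fall within the tolerance."""
    hits = sum(within_tolerance(p, e, tol) for p, e in pairs)
    return hits / len(pairs)

# (predicted, expected) pairs taken from the worked examples in this post.
SAMPLE_PAIRS = [
    (1459.0, 1458.7),   # Grok, YDUQS Adjusted EBITDA (rounded)
    (9827.0, 9827.05),  # ChatGPT, Hero MotoCorp revenue (rounded)
    (1543.9, 1458.7),   # Gemini, YDUQS (different figure, ~5.8% off)
    (1107.0, 1458.7),   # Perplexity, YDUQS (different figure, ~24% off)
]

for tol in (0.0, 0.01, 0.05, 0.10):
    print(f"±{tol:.0%}: {accuracy(SAMPLE_PAIRS, tol):.2f}")
```

On these four pairs none is an exact match, the two rounding-only misses pass at ±1%, Gemini's figure only enters at ±10%, and Perplexity's stays outside every band, which mirrors how rounding-heavy models gain most at tight tolerances.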

Accuracy in companies with fiscal/calendar period offsets

Period alignment was a key stress test. Of the 170 companies in the sample whose fiscal year differs from the calendar year, Claude + Daloopa MCP achieved 92.4% exact match. The next best results were ChatGPT (42.9%) and Grok (41.2%); Perplexity, Claude (no MCP), and Gemini lagged far behind. Grounding and explicit period normalization appeared to be the deciding factors.
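Explicit period normalization means turning a fiscal label into calendar dates before looking anything up. The sketch below is a hypothetical helper, not Daloopa's implementation. It assumes fiscal quarters are consecutive three-month blocks and takes the naming convention as a parameter, because sources differ on whether “FY2022” names the calendar year in which the fiscal year starts or the one in which it ends.

```python
from datetime import date, timedelta

def fiscal_quarter_dates(fy_label: int, quarter: int, fy_end_month: int,
                         label_by: str = "end") -> tuple[date, date]:
    """Return (first_day, last_day) of a fiscal quarter in calendar terms.

    fy_label:     fiscal-year number as written in the question, e.g. 2021
    quarter:      fiscal quarter, 1-4
    fy_end_month: calendar month in which the fiscal year ends (3 = March, 12 = December)
    label_by:     "end" if FY N ends in calendar year N, "start" if FY N begins in year N
    """
    if quarter not in (1, 2, 3, 4):
        raise ValueError("quarter must be 1-4")
    if label_by not in ("end", "start"):
        raise ValueError("label_by must be 'end' or 'start'")

    start_month = fy_end_month % 12 + 1           # month after the fiscal year end
    if fy_end_month == 12 or label_by == "start":
        start_year = fy_label                     # FY begins in the labelled year
    else:
        start_year = fy_label - 1                 # FY ends in the labelled year

    # Count months from year 0 so the arithmetic wraps across calendar years.
    first = start_year * 12 + (start_month - 1) + 3 * (quarter - 1)
    after_last = first + 3                        # first month of the next quarter
    first_day = date(first // 12, first % 12 + 1, 1)
    last_day = date(after_last // 12, after_last % 12 + 1, 1) - timedelta(days=1)
    return first_day, last_day

# Hero MotoCorp: March year end, fiscal year named by the year it ends in.
print(fiscal_quarter_dates(2021, 3, 3))
# A March year-end "FY2022" under each naming convention.
print(fiscal_quarter_dates(2022, 4, 3, "end"), fiscal_quarter_dates(2022, 4, 3, "start"))
```

For Hero MotoCorp, Q3 FY2021 maps to October 1 to December 31, 2020, the quarter cited in the example above. For a March year-end issuer such as Hazama Ando, “FY2022” ends on March 31, 2022 under the end-year convention but on March 31, 2023 under the start-year convention, which is the kind of one-year shift seen in the period-confusion examples.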

Accuracy on companies with fiscal/calendar period offsets

Rating precision, conciseness and insight

- Precision mirrors the main metric. Claude + MCP leads (4.8), with ChatGPT 4.2, Grok 3.9, Perplexity 3.0, Claude (no MCP) 2.8, Gemini 1.8.

- Conciseness isn’t the same as correctness. ChatGPT is the most concise (4.8), followed by Grok (4.5) and MCP (3.8). Claude (no MCP) is wordier (2.3).

- Insight trades off with brevity, sometimes unhelpfully. Claude (no MCP) scores highest on our “insight” rubric (3.5) and Gemini scores 2.9, but both come with lower precision. MCP balances context and accuracy (2.9 insight).

Average rating by category and chatbot

Verbosity

- ChatGPT is the most concise at ~29 tokens on average.

- Grok (39) and Perplexity (44) are also fairly terse.

- Claude + Daloopa MCP averages ~119 tokens and Gemini ~125—more narrative around the number.

- Claude (no MCP) is the longest by far at ~226 tokens (≈ 7–8× ChatGPT).

- There’s no positive correlation between verbosity and precision. Claude (no MCP) is the longest but lands near the bottom on exact match; MCP is longer than ChatGPT yet most accurate.

- For machine‑readable workflows, structured output with optional inline citations strikes the best balance.
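The “no positive correlation” observation can be checked against the averages reported in this section. A minimal sketch that computes a plain Pearson coefficient by hand; `STATS` and `pearson` are illustrative names, and with only six chatbots the result is descriptive, not a statistical test.

```python
from math import sqrt
from statistics import mean

# Averages reported in this section: (tokens per answer, exact-match %, precision rating).
STATS = {
    "ChatGPT":              (29, 63.8, 4.2),
    "Grok 4":               (39, 57.4, 3.9),
    "Perplexity Finance":   (44, 33.8, 3.0),
    "Claude + Daloopa MCP": (119, 94.2, 4.8),
    "Gemini 2.5 Pro":       (125, 11.2, 1.8),
    "Claude (no MCP)":      (226, 30.6, 2.8),
}

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

tokens = [t for t, _, _ in STATS.values()]
r_exact = pearson(tokens, [e for _, e, _ in STATS.values()])
r_precision = pearson(tokens, [p for _, _, p in STATS.values()])
print(f"tokens vs exact match: r = {r_exact:.2f}")
print(f"tokens vs precision:   r = {r_precision:.2f}")
```

Both coefficients come out negative (roughly -0.28 against exact match and -0.37 against the precision rating), consistent with the observation that longer answers were not more accurate.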

Chatbot Verbosity: Average Token Count per Answer

Conclusion

Across 500 single-number questions drawn from a list of 4,000+ public companies, exact financial retrieval remains challenging for general-purpose chatbots. The grounded setup, Claude + Daloopa MCP, delivered 94.2% exact-match accuracy, outperforming the next best general chatbot by 30 points; at 1% tolerance it reached 97.2%, a lead of about 19 points (see the accuracy bar chart and tolerance curves). The advantage persisted in the hardest cases (companies with fiscal/calendar offsets), where MCP achieved 92.4% vs. 42.9% for the next best general model. The dominant failure modes were ambiguous question interpretation, rounding slips, and adjacent-period shifts; we also observed models calculating metrics instead of retrieving the reported figure, plus a meaningful share of non-answers/refusals in some systems. Verbosity varied widely (e.g., ~30 tokens for ChatGPT vs. >200 for Claude without MCP), and more words did not translate into higher accuracy.

For research-grade workflows, the takeaway is clear: document-grounded retrieval, period/currency-aware normalization, and concise answers with verifiable citations are prerequisites, not nice-to-haves. To enable full auditability and replication, we’re releasing the complete dataset and publishing links to every chatbot conversation so readers can trace each result end-to-end. We invite the community to reproduce and extend these findings.

Methodology

- Task – Retrieve a single, explicitly reported number from a company’s official document.

- Ground truth – The figure exactly as written, unit/currency and period included, anchored to a page/section/table in the source.

- One turn, same instructions – We asked each chatbot in its web app to return the number (with unit/currency). No APIs, agents or wrappers.

- Measuring accuracy – Correctness is exact match under tight normalization rules: percentages must match when rounded to two decimals; monetary values must match in the asked unit/currency; we ignore thousands separators and whitespace but not scale or sign.

- Transparency, not a rolled-up score – We published the full dataset and links to the original conversations for every answer so anyone can inspect the traces.

- Error analysis – After scoring, we tagged misses into a small set of causes (ambiguous interpretation, rounding/formatting, period alignment, and refusals), as detailed in the Error breakdown section.
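The correctness rule can be expressed as a small comparison function. This is a minimal sketch under stated assumptions, not the released evaluation harness: it assumes the numeric answer has already been extracted as a string in the asked unit and currency, that ',' is only ever a thousands separator and '.' the decimal mark, and the names `parse_reported_number` and `is_exact_match` are hypothetical.

```python
import re

def parse_reported_number(text: str) -> float:
    """Parse a reported figure, ignoring whitespace, ',' separators and a '%' sign.

    Sign and scale are preserved: '-7' stays negative and '1.46' is never
    reinterpreted as '1,460'.
    """
    cleaned = re.sub(r"[\s,%]", "", text).replace("\u2212", "-")  # unicode minus -> ASCII
    return float(cleaned)

def is_exact_match(predicted: str, expected: str, kind: str = "monetary") -> bool:
    """Exact match under the normalization rules listed above."""
    p, e = parse_reported_number(predicted), parse_reported_number(expected)
    if kind == "percent":
        # Percentages must agree once both are rounded to two decimals.
        return round(p, 2) == round(e, 2)
    # Monetary values, already in the asked unit/currency, must agree exactly.
    return p == e

print(is_exact_match("1,458.7", "1458.7"))  # True: separators are ignored
print(is_exact_match("1,459", "1,458.7"))   # False: rounding counts as a miss
```

Under this rule, the rounded answers in the YDUQS and Hero MotoCorp examples are misses, which is why they land in the Rounding / Formatting bucket.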

Distribution of question categories

We asked mostly core operating questions. Revenue leads with 100 prompts (≈20%), followed by Operating Metrics/KPIs (92) and Profitability/Margins (61). Production and cost questions (57) and Segment Performance (45) test table lookups beyond headline numbers. The long tail, consisting of Guidance (34), Balance Sheet/Capital (26), Cash Flow/FFO (18), and Backlog/Orders (5), ensures coverage of capital intensity and liquidity topics.

Distribution of question categories (bucketed)

Distribution of answer currencies

Answers spanned 30+ denominations/units. USD dominates (232, ~46%), with a sizable JPY share (87, ~17%), reflecting issuer mix. We also include non‑currency figures like Percent (33) and generic Units (17) plus a long tail across EUR (22), INR (11), GBP (8), CNY (6), and many others—each ≤5%. This diversity stresses currency and scale normalization, a common source of rounding/formatting mistakes.

Figure. Distribution of answer currencies/units (n=500). Heavy USD and JPY presence, plus a broad long tail and non‑currency units, make correct denomination handling essential.

Distribution of answer currency (normalized)
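Scale handling is where many formatting misses start: the examples above mix “billion”, “million”, and Indian “crore” (10 million) figures. A minimal sketch of converting such strings to a common scale, assuming the first number in the string is the answer and ignoring currency symbols entirely; `SCALES`, `NUMBER_WITH_SCALE`, and `to_scale` are hypothetical names, and a production normalizer would also need currency and decimal-comma handling.

```python
import re
from decimal import Decimal

# Multipliers for scale words; lakh (10^5) and crore (10^7) are Indian units.
SCALES = {
    "": Decimal(1),
    "thousand": Decimal(10) ** 3,
    "lakh": Decimal(10) ** 5,
    "million": Decimal(10) ** 6,
    "crore": Decimal(10) ** 7,
    "billion": Decimal(10) ** 9,
}

# First signed number (comma separators allowed), optionally followed by a scale word.
NUMBER_WITH_SCALE = re.compile(
    r"(-?[\d,]*\.?\d+)\s*(thousand|lakh|million|crore|billion)?", re.IGNORECASE
)

def to_scale(text: str, target: str = "million") -> Decimal:
    """Convert a figure such as 'R$ 1.33 billion' to a Decimal in `target` units."""
    match = NUMBER_WITH_SCALE.search(text)
    if match is None:
        raise ValueError(f"no number found in {text!r}")
    value = Decimal(match.group(1).replace(",", ""))
    scale = SCALES[(match.group(2) or "").lower()]
    return value * scale / SCALES[target]

print(to_scale("R$ 1.33 billion"))                 # in millions
print(to_scale("9,827.05 crore", target="crore"))  # unchanged scale
```

For instance, `to_scale("R$ 1.33 billion")` gives 1,330 (million), which makes it easy to see that Claude's unadjusted EBITDA figure is not the expected 1,458.7 million, and using `Decimal` rather than `float` keeps conversions such as crore to million exact.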

Reproducibility & Limitations

- Reproducibility: the question set (500), ground‑truth snippets, prompts, and evaluation harness are open sourced for audit.

- Scope: this benchmark tests single‑number extraction from official documents. It does not evaluate complex multi‑step analysis, forecasting, or cross‑document aggregation.

- Sampling: companies were randomly sampled from a list of 4,000+ names; sector, geographic, and filing‑format imbalances may exist.

- Latency & cost: not measured here; results reflect quality only.