In finance, the right call at the right second makes money. The wrong one costs it.

Large language models have quickly gained attention as potential disruptors, handling rapid calculations and predictions once left only to seasoned experts. But can large language models analyze financial statements well and deliver reliable insights without introducing new risks?

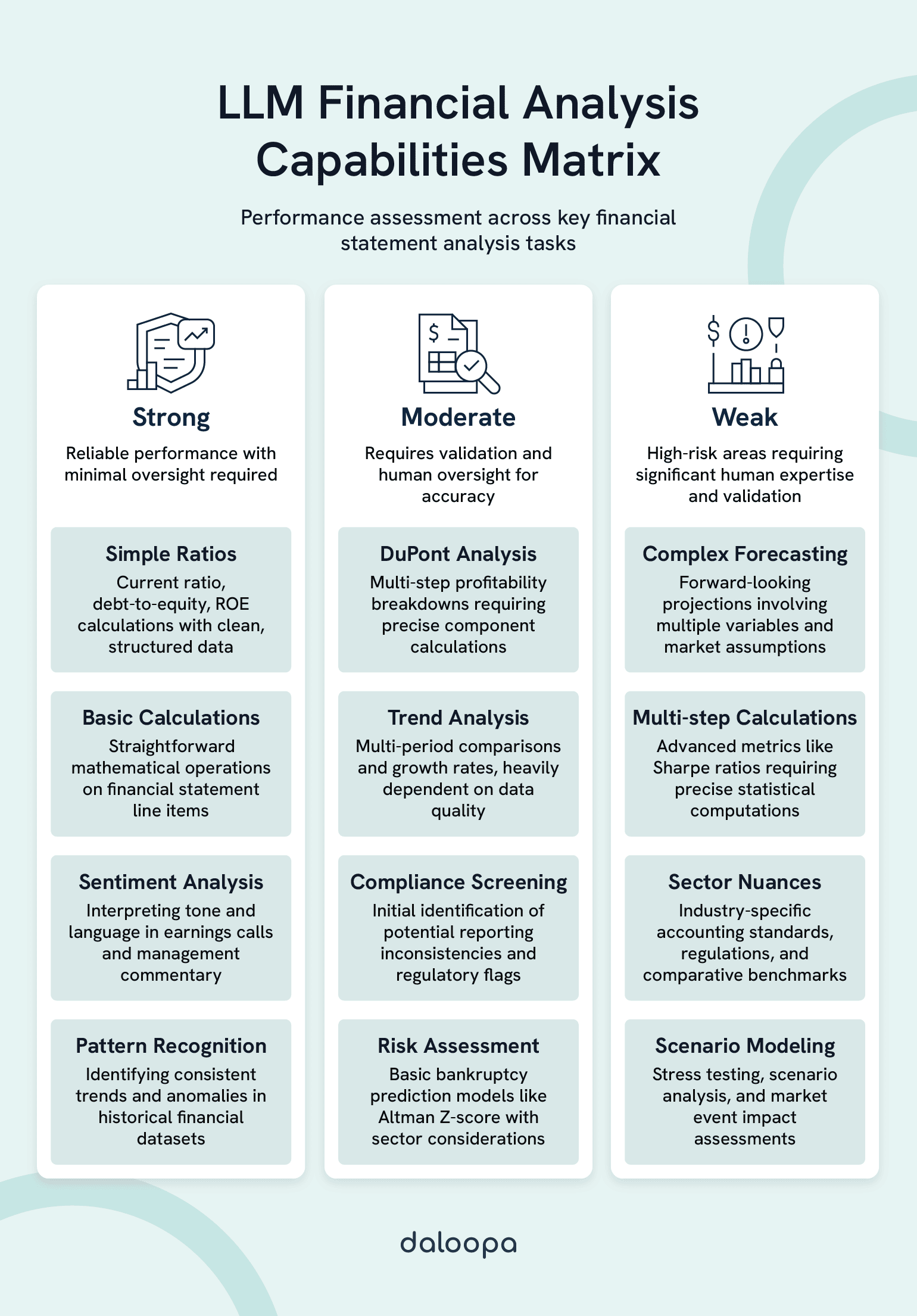

Early results are encouraging. LLMs perform well in areas like ratio computation and bankruptcy risk estimation, showing real promise as a time-saver and decision-support tool. That said, they still stumble with complex numerical data and forecasting. Tests with DuPont analysis and Z-score models highlight both their potential and their blind spots. For analysts, portfolio managers, and tech leads, knowing where large language models analyze financial statements successfully and where they still need human input can make the difference between a smart edge and a risky bet.

Key Takeaways

- LLMs handle simple ratios well but struggle with complex calculations and predictive models.

- Techniques such as fine-tuning, retrieval-augmented generation (RAG), and few-shot prompting boost accuracy but can’t fully eliminate high-stakes risks.

- Human oversight, paired with high-quality, verifiable data from reliable sources like Daloopa, ensures compliance and prevents costly errors.

Current Capabilities: How Large Language Models Analyze Financial Statements

Modern LLMs can competently handle certain financial tasks, from calculating common ratios to assessing bankruptcy risk. However, successful implementation requires understanding their limitations as well as their capabilities. In many LLM use cases in finance, these models save time by automating repetitive analysis, freeing analysts to focus on judgment-intensive tasks.

With technologies like Daloopa’s MCP integration, built on the open Model Context Protocol (MCP), these capabilities expand even further. It integrates structured, source-linked financial data directly into AI workflows, enabling faster model building, automated KPI extraction, and richer context for complex calculations, all without disrupting how analysts already work. This makes AI in financial statement analysis far more actionable and auditable.

Technical Foundations for Financial Analysis

LLMs interpret financial statements using transformer-based neural networks trained on large-scale datasets of textual and numerical content. Models like BloombergGPT and Claude for Financial Services employ attention mechanisms to detect relationships between line items and their broader context.

Core technical components include:

- Multi-modal processing: Combining numerical data from structured statements with narrative text in reports.

- Fine-tuning capabilities: Adapting to industry-specific terminology and calculation standards.

- Retrieval-augmented generation (RAG): Drawing on external financial databases for improved accuracy.

Through large-scale pretraining and supervised fine-tuning, these systems learn to identify patterns connecting balance sheets, income statements, and cash flow statements. They can detect correlations that rigid rule-based approaches overlook.

Ratio analysis, for example, demands the ability to extract the right figures from layered documents and apply formulas correctly to produce metrics like current ratios, ROE, or leverage ratios.
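Once the figures are extracted, the formulas themselves are deterministic, so a common safeguard is to recompute them outside the model. Below is a minimal Python sketch of the three ratios named above. The `Financials` fields and sample values are hypothetical, and ROE here uses average shareholders' equity, which is one convention among several.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Financials:
    """Hypothetical extracted line items (same currency unit throughout)."""
    current_assets: float
    current_liabilities: float
    net_income: float
    avg_shareholders_equity: float
    total_debt: float
    total_equity: float


def current_ratio(f: Financials) -> float:
    # Liquidity: can short-term assets cover short-term obligations?
    return f.current_assets / f.current_liabilities


def return_on_equity(f: Financials) -> float:
    # Profitability: net income earned per unit of average equity
    return f.net_income / f.avg_shareholders_equity


def debt_to_equity(f: Financials) -> float:
    # Leverage: total debt relative to book equity
    return f.total_debt / f.total_equity


sample = Financials(
    current_assets=500.0,
    current_liabilities=250.0,
    net_income=120.0,
    avg_shareholders_equity=800.0,
    total_debt=400.0,
    total_equity=1000.0,
)
print(f"Current ratio:  {current_ratio(sample):.2f}")     # 2.00
print(f"ROE:            {return_on_equity(sample):.1%}")  # 15.0%
print(f"Debt-to-equity: {debt_to_equity(sample):.2f}")    # 0.40
```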

Natural language processing components further enable these models to interpret management commentary, footnotes, and transcripts, enriching the numerical picture with qualitative insight.

For instance, after reading a CEO’s letter mentioning “temporary production challenges,” the model can factor that into context when explaining a dip in quarterly margins.

Key Financial Analysis Tasks Performed by LLMs

Tests of AI in financial statement analysis often focus on three areas: ratio accuracy, DuPont decomposition, and Z-score-based bankruptcy prediction. Research on these capabilities shows varying results depending on task complexity and data quality.
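DuPont decomposition makes a useful test case because its components must multiply back to ROE, which gives a built-in consistency check on any model output. A minimal sketch of the three-step version, with hypothetical inputs and period-end balances (analysts often use averages instead):

```python
import math


def dupont(net_income: float, revenue: float, total_assets: float,
           shareholders_equity: float) -> dict[str, float]:
    """Three-step DuPont: ROE = net margin x asset turnover x equity multiplier."""
    net_margin = net_income / revenue
    asset_turnover = revenue / total_assets
    equity_multiplier = total_assets / shareholders_equity
    roe = net_margin * asset_turnover * equity_multiplier
    # The product must equal net income / equity; a mismatch signals a bad input or formula.
    if not math.isclose(roe, net_income / shareholders_equity):
        raise ValueError("DuPont components do not reconcile to ROE")
    return {
        "net_margin": net_margin,
        "asset_turnover": asset_turnover,
        "equity_multiplier": equity_multiplier,
        "roe": roe,
    }


result = dupont(net_income=120.0, revenue=1500.0, total_assets=2000.0,
                shareholders_equity=800.0)
for name, value in result.items():
    print(f"{name:>17}: {value:.4f}")
```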

Primary analysis functions:

| Task Category | Specific Applications | Accuracy Considerations |
| --- | --- | --- |
| Ratio Calculation | Liquidity, profitability, efficiency metrics | Reliable when inputs are clean |
| Predictive Modeling | Altman Z-score, bankruptcy prediction | Results vary by sector and dataset |
| Trend Analysis | Multi-period comparisons, growth rates | Strongly dependent on source quality |
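For the predictive row, the original Altman (1968) Z-score for publicly traded manufacturers is a weighted sum of five ratios, with conventional cutoffs of 1.81 and 2.99. The sketch below uses those published coefficients; the company figures are hypothetical. Later variants (Z' for private firms, Z'' for non-manufacturers) use different weights, which is one reason results vary by sector.

```python
def altman_z(working_capital: float, retained_earnings: float, ebit: float,
             market_value_equity: float, sales: float,
             total_assets: float, total_liabilities: float) -> float:
    """Original Altman Z-score for public manufacturing firms."""
    x1 = working_capital / total_assets
    x2 = retained_earnings / total_assets
    x3 = ebit / total_assets
    x4 = market_value_equity / total_liabilities
    x5 = sales / total_assets
    return 1.2 * x1 + 1.4 * x2 + 3.3 * x3 + 0.6 * x4 + 1.0 * x5


def zone(z: float) -> str:
    # Conventional cutoffs: above 2.99 safe, 1.81 to 2.99 grey, below 1.81 distress
    if z > 2.99:
        return "safe"
    if z >= 1.81:
        return "grey"
    return "distress"


z = altman_z(working_capital=150.0, retained_earnings=300.0, ebit=120.0,
             market_value_equity=900.0, sales=1100.0,
             total_assets=1000.0, total_liabilities=500.0)
print(f"Z = {z:.2f} ({zone(z)})")  # Z = 3.18 (safe)
```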

Forecasting is another application. LLMs can attempt forward-looking projections by analyzing historical EBITDA, revenue trends, and market signals.
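One way to keep model forecasts honest is to compare them with a transparent baseline. The sketch below computes a compound annual growth rate (CAGR) from hypothetical revenue history and extrapolates it. It is a naive benchmark, not a forecasting method; an LLM projection that diverges sharply from it should come with a stated reason.

```python
def cagr(values: list[float]) -> float:
    """Compound annual growth rate between the first and last observations."""
    if len(values) < 2 or values[0] <= 0 or values[-1] <= 0:
        raise ValueError("need at least two positive observations")
    periods = len(values) - 1
    return (values[-1] / values[0]) ** (1 / periods) - 1


def naive_projection(last_value: float, rate: float, years: int) -> list[float]:
    """Extrapolate the last value forward at a constant growth rate."""
    return [last_value * (1 + rate) ** t for t in range(1, years + 1)]


revenue = [100.0, 110.0, 121.0, 133.1]  # hypothetical, four fiscal years
growth = cagr(revenue)
baseline = naive_projection(revenue[-1], growth, years=2)
print(f"CAGR: {growth:.1%}")             # CAGR: 10.0%
print([round(v, 2) for v in baseline])   # [146.41, 161.05]
```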

More advanced implementations also look for accounting anomalies, scanning for inconsistent ratios or unexpected account relationships.
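A simple, non-LLM version of this kind of scan checks whether reported figures satisfy basic accounting identities. The sketch below, using hypothetical field names and values, tests the balance sheet equation and the gross profit definition, allowing a small tolerance for rounding in reported figures.

```python
def check_identities(stmt: dict[str, float], tol: float = 0.5) -> list[str]:
    """Return human-readable issues; an empty list means both identities hold within tol."""
    issues = []
    # Balance sheet: assets = liabilities + equity
    bs_gap = stmt["total_assets"] - (stmt["total_liabilities"] + stmt["total_equity"])
    if abs(bs_gap) > tol:
        issues.append(f"Balance sheet off by {bs_gap:,.1f}")
    # Income statement: gross profit = revenue - cost of goods sold
    gp_gap = stmt["gross_profit"] - (stmt["revenue"] - stmt["cogs"])
    if abs(gp_gap) > tol:
        issues.append(f"Gross profit off by {gp_gap:,.1f}")
    return issues


extracted = {
    "total_assets": 2000.0,
    "total_liabilities": 1200.0,
    "total_equity": 780.0,  # hypothetical mis-read figure
    "revenue": 1500.0,
    "cogs": 900.0,
    "gross_profit": 600.0,
}
print(check_identities(extracted))  # ['Balance sheet off by 20.0']
```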

Sentiment analysis layers add qualitative depth by interpreting tone and language in executive communications, which can influence forward-looking risk assessments.

Conversational interfaces such as ChatGPT allow analysts to adjust model assumptions interactively, testing scenarios on demand. Daloopa GPT takes these interactive capabilities a step further. It is accessible through OpenAI’s GPT directory and offers direct access to auditable financial data from thousands of public companies. An analyst could ask, “What happens to projected free cash flow if we assume a 10% drop in sales volume?” and get an instant error-free recalculation with each datapoint hyperlinked to the original source for full auditability.
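The arithmetic behind a question like that is straightforward once the assumptions are explicit. The sketch below does not describe how Daloopa GPT computes anything; it is a generic unlevered free cash flow model with hypothetical inputs, showing why a 10% volume drop can cut free cash flow by far more than 10% when fixed costs are present.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Assumptions:
    units: float        # units sold
    price: float        # price per unit
    var_cost: float     # variable cost per unit
    fixed_costs: float  # fixed cash operating costs
    d_and_a: float      # depreciation and amortization
    tax_rate: float
    capex: float
    delta_nwc: float    # increase in net working capital


def free_cash_flow(a: Assumptions) -> float:
    """Unlevered FCF = EBIT x (1 - tax rate) + D&A - capex - change in NWC."""
    revenue = a.units * a.price
    ebit = revenue - a.units * a.var_cost - a.fixed_costs - a.d_and_a
    nopat = ebit * (1 - a.tax_rate)  # simplification: negative EBIT yields a negative tax
    return nopat + a.d_and_a - a.capex - a.delta_nwc


base = Assumptions(units=1_000_000, price=50.0, var_cost=30.0,
                   fixed_costs=8_000_000, d_and_a=2_000_000, tax_rate=0.25,
                   capex=3_000_000, delta_nwc=500_000)
stress = replace(base, units=base.units * 0.9)  # 10% drop in sales volume

fcf_base, fcf_stress = free_cash_flow(base), free_cash_flow(stress)
print(f"Base FCF:   {fcf_base:,.0f}")                   # Base FCF:   6,000,000
print(f"Stress FCF: {fcf_stress:,.0f}")                 # Stress FCF: 4,500,000
print(f"Change:     {fcf_stress / fcf_base - 1:+.1%}")  # Change:     -25.0%
```

Under these assumptions, fixed costs stay put while contribution margin shrinks, so the 10% volume drop becomes a 25% decline in free cash flow.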

Real-World Applications and LLMs in Finance Use Cases

Some banks and investment houses already use large language models to analyze financial statements for pre-screening public filings or automating parts of credit risk evaluations. These deployments aim to save time without replacing human scrutiny.

Enterprise implementation examples:

- Credit risk assessment: Automated screening of borrower statements for underwriting.

- Portfolio monitoring: Ongoing checks of holdings against target financial indicators.

- Regulatory compliance: Identifying possible reporting inconsistencies automatically.

Private equity teams are also exploring LLMs during due diligence, scanning multi-year data for performance shifts and using the output to guide deeper manual review. For example, a model could highlight a sudden spike in SG&A expenses in year three of a five-year dataset, prompting the team to investigate a possible management change.
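The SG&A example maps onto a simple year-over-year screen that can run before or alongside an LLM review. A minimal sketch, where the 25% threshold and the expense figures are hypothetical:

```python
def flag_spikes(series: dict[int, float], threshold: float = 0.25) -> list[tuple[int, float]]:
    """Return (year, change) pairs where the year-over-year change meets the threshold."""
    years = sorted(series)
    flags = []
    for prev, curr in zip(years, years[1:]):
        change = series[curr] / series[prev] - 1
        if abs(change) >= threshold:
            flags.append((curr, change))
    return flags


sga = {2019: 200.0, 2020: 208.0, 2021: 290.0, 2022: 296.0, 2023: 301.0}  # hypothetical
flags = flag_spikes(sga)
for year, change in flags:
    print(f"{year}: {change:+.1%}")  # 2021: +39.4%
```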

Quantitative funds blend model results with traditional analyses to shortlist securities. In risk management, LLMs flag declining metrics early, prompting timely intervention.

Yet all such uses depend on robust validation processes. Data quality, training scope, and context influence reliability, and without careful oversight, errors may slip through.

In financial AI applications, data quality isn’t just important. It determines whether outputs are insightful or dangerously misleading. With Daloopa’s high-quality, fully auditable financial data, LLMs can operate on clean, consistent inputs, dramatically improving accuracy and reducing the risk of errors caused by incomplete or inconsistent datasets.

Limitations and Challenges When Large Language Models Analyze Financial Statements

Despite their promise, large language models analyzing financial statements face serious hurdles in both numerical processing and financial domain specialization. Their accuracy falters on complex metrics, and they cannot independently access key proprietary datasets.

One major barrier to adoption in finance is trust. Hallucination rates for unassisted LLMs can reach 50%, making analysts wary of using them for anything beyond low-stakes tasks. Daloopa MCP solves this by feeding vetted, hyperlinked data directly into the model, cutting hallucination rates to 0% and embedding seamlessly into existing analyst workflows.

Technical Constraints

- Mathematical processing limitations: These remain a primary obstacle. Multi-step calculations, like those behind certain profitability metrics, can produce arithmetic mistakes, undermining trust in results.

- Memory and context limits: Finite context windows restrict a model’s ability to process long financial filings in one pass. Beyond a certain length, models may overlook or misinterpret key data from earlier in the document.

- Training data gaps: Without exposure to uniform, standards-based financial data, models risk confusing GAAP and IFRS principles or misreading sector-specific terms.

- Real-time data integration: Without built-in live market feeds, outputs can lag behind current conditions, an issue for traders or risk teams needing immediate updates.
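A common mitigation for arithmetic errors is to treat every number the model reports as a claim and recompute it deterministically from the source inputs. The sketch below assumes the model's answers have already been parsed into a dictionary; the metric names, inputs, and 0.5% relative tolerance are hypothetical.

```python
import math


def recompute(inputs: dict[str, float]) -> dict[str, float]:
    """Deterministic versions of the metrics the model was asked for."""
    return {
        "gross_margin": (inputs["revenue"] - inputs["cogs"]) / inputs["revenue"],
        "operating_margin": inputs["operating_income"] / inputs["revenue"],
    }


def verify(reported: dict[str, float], inputs: dict[str, float],
           rel_tol: float = 0.005) -> dict[str, bool]:
    """Map each reported metric to True if it matches the recomputed value."""
    expected = recompute(inputs)
    return {
        name: math.isclose(value, expected[name], rel_tol=rel_tol)
        for name, value in reported.items()
        if name in expected
    }


source = {"revenue": 1500.0, "cogs": 900.0, "operating_income": 210.0}
llm_reported = {"gross_margin": 0.40, "operating_margin": 0.41}  # second value is wrong
print(verify(llm_reported, source))  # {'gross_margin': True, 'operating_margin': False}
```

Metrics without a deterministic counterpart are skipped here; a stricter version might route them to manual review instead.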

Domain-Specific Challenges

- Complex metric calculations: For instance, the Sharpe ratio demands precise handling of multiple statistical inputs, an area where many LLMs falter. A model might correctly pull historical returns but miscalculate standard deviation, skewing the entire risk-adjusted return figure.

- Lack of direct database access: They cannot query resources on their own, forcing reliance on user-provided data that may be incomplete.

- Sector nuances: From insurance reserve accounting to bank capital requirements, sector-specific rules often lie outside the reach of generalist models unless they are heavily fine-tuned.

- Regulatory fluidity: Frequent updates to SEC rules and tax codes require continuous retraining or refreshed reference data to keep interpretations compliant.

- Comparative analysis gaps: These emerge when models lack deep familiarity with industry norms. A model might compare a utility company’s margins with a tech startup’s and flag the utility as underperforming, without accounting for differences in sector cost structures.
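To see how easily the Sharpe ratio goes wrong, here is a minimal sketch with hypothetical monthly returns. It divides mean excess return by the standard deviation of excess returns and annualizes by the square root of 12, a common convention that assumes independent returns. Swapping sample standard deviation for population standard deviation alone changes the answer.

```python
import math
import statistics


def sharpe_ratio(returns: list[float], rf_per_period: float,
                 periods_per_year: int = 12, sample: bool = True) -> float:
    """Annualized Sharpe ratio from per-period returns."""
    excess = [r - rf_per_period for r in returns]
    # Sample (n - 1) vs population (n) standard deviation is a frequent silent mismatch.
    sd = statistics.stdev(excess) if sample else statistics.pstdev(excess)
    return statistics.mean(excess) / sd * math.sqrt(periods_per_year)


monthly = [0.02, -0.01, 0.03, 0.01, -0.02, 0.015]  # hypothetical fund returns
rf = 0.002  # hypothetical monthly risk-free rate

print(f"Sharpe (sample sd):     {sharpe_ratio(monthly, rf):.2f}")
print(f"Sharpe (population sd): {sharpe_ratio(monthly, rf, sample=False):.2f}")
```

With only six observations the population version comes out noticeably higher, because dividing by n rather than n - 1 shrinks the estimated volatility.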

Ethical and Practical Concerns

- Accountability in decisions: When an AI-generated recommendation goes wrong, tracing the reasoning can be difficult, making responsibility unclear.

- Bias in predictions: This can seep in from historical training data, affecting lending fairness or skewing risk profiles.

- Opaque reasoning: It limits transparency, complicating audits or regulator reviews. Unlike interpretable models, LLMs rarely reveal how they reached a conclusion.

- Data protection: This is another concern, especially for cloud-based tools processing proprietary client data.

- Over-dependence: Sidelining human expertise creates risk during market events that fall outside the model’s training data.

Mathematical errors, training data gaps, and regulatory complexities all pose risks. Still, as AI in financial statement analysis improves with structured, audited datasets, reliability is rising.

Future Directions and Integration With Human Expertise

Improving LLM performance for finance will depend on better computational precision, domain-specific training, and integrated workflows that pair AI’s processing speed with human judgment. For instance, analysts can let large language models analyze financial statements at scale, then verify anomalies before they influence investment decisions.

Emerging Enhancements in LLM Financial Capabilities

Specialized financial training offers the clearest path forward. Models fine-tuned on broad, high-quality financial datasets are already showing better accuracy in tasks like earnings forecasting and complex ratio work.

For example, a fine-tuned model might not only calculate free cash flow correctly but also flag a one-time capex event that distorts year-over-year comparisons.
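The free cash flow example can be made concrete. Using the simple definition FCF = operating cash flow - capital expenditures and hypothetical figures, the sketch shows how a single large, non-recurring capex item turns modest growth into an apparent collapse. How the one-time amount is identified (for example, from footnotes or MD&A) is assumed here, not shown.

```python
def free_cash_flow(cfo: float, capex: float) -> float:
    """Simple FCF: cash from operations minus capital expenditures."""
    return cfo - capex


def yoy(curr: float, prev: float) -> float:
    return curr / prev - 1


# Hypothetical figures; one_time_capex would come from filing disclosures
history = {
    2022: {"cfo": 400.0, "capex": 150.0, "one_time_capex": 0.0},
    2023: {"cfo": 420.0, "capex": 310.0, "one_time_capex": 160.0},
}

reported = {y: free_cash_flow(d["cfo"], d["capex"]) for y, d in history.items()}
adjusted = {y: free_cash_flow(d["cfo"], d["capex"] - d["one_time_capex"])
            for y, d in history.items()}

print(f"Reported FCF growth: {yoy(reported[2023], reported[2022]):+.0%}")  # -56%
print(f"Adjusted FCF growth: {yoy(adjusted[2023], adjusted[2022]):+.0%}")  # +8%
```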

Some experimental architectures blend financial knowledge graphs with text processing, strengthening outputs for asset pricing models and multi-step calculations. Think of a model pulling both historical earnings data and macroeconomic indicators to refine its valuation range for an industrial stock.

Real-time data integration could let models evaluate both historical reports and live market information, improving forecasts in volatile environments. For instance, an LLM could instantly adjust its risk rating for an airline after a sudden spike in oil prices.

| Enhancement Area | Current Capability | Target Improvement |
| --- | --- | --- |
| Ratio Calculation | 75-85% accuracy | 95%+ accuracy |
| Earnings Forecasting | Limited context | Multi-year projection |
| Risk Assessment | Basic metrics | Complex scenario modeling |

Advances in multi-modal processing will help models read tables, charts, and visual data alongside narrative sections, reducing the manual work analysts still do. Because these tools can process unstructured data, summarize reports, and identify anomalies, they are well suited to finance use cases such as ratio analysis, risk modeling, and compliance monitoring.

Human-AI Collaborative Frameworks

Hybrid decision-making setups let AI handle computation while analysts focus on interpretation, especially in asset pricing. For example, an LLM might run 10 different pricing models for a bond, while the analyst decides which assumptions best fit the market climate.
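As a much simpler stand-in for running several pricing models, the sketch below reprices one plain fixed-rate bond with a discounted cash flow formula under several yield assumptions; choosing which yield fits the market climate remains the analyst's call. The bond terms and yields are hypothetical, and the code assumes semiannual coupons and a valuation date on a coupon date (no accrued interest).

```python
def bond_price(face: float, coupon_rate: float, years: int, ytm: float,
               freq: int = 2) -> float:
    """Price = PV of coupons + PV of face value, discounted at ytm/freq per period."""
    n = years * freq
    coupon = face * coupon_rate / freq
    y = ytm / freq
    pv_coupons = sum(coupon / (1 + y) ** t for t in range(1, n + 1))
    pv_face = face / (1 + y) ** n
    return pv_coupons + pv_face


# Hypothetical 10-year, 5% coupon bond, priced per 100 of face value
for ytm in (0.03, 0.04, 0.05, 0.06, 0.07):
    print(f"YTM {ytm:.0%}: {bond_price(100.0, 0.05, 10, ytm):.2f}")
```

At a 5% yield the price equals par (100.00) because the coupon rate matches the discount rate; higher yields produce lower prices.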

Verification steps at key points in the process ensure that humans validate AI outputs before any high-impact decision. Think of it as the AI doing the heavy lifting and the analyst signing off before it moves from spreadsheet to trading desk.
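One way to encode that sign-off step in software is a gate that refuses to release high-impact outputs until a named analyst approves them. A minimal sketch; the class, field names, and the notion of "high impact" are hypothetical placeholders for whatever a firm's policy defines.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIOutput:
    description: str
    value: float
    high_impact: bool
    approved_by: Optional[str] = None


class ApprovalRequired(Exception):
    """Raised when a high-impact output is released without sign-off."""


def approve(output: AIOutput, analyst: str) -> None:
    output.approved_by = analyst


def can_release(output: AIOutput) -> bool:
    # Low-impact outputs pass; high-impact ones need a named approver.
    return not output.high_impact or output.approved_by is not None


def release(output: AIOutput) -> AIOutput:
    if not can_release(output):
        raise ApprovalRequired(f"'{output.description}' needs analyst sign-off")
    return output


valuation = AIOutput("DCF fair value, hypothetical ticker XYZ", 42.50, high_impact=True)
print(can_release(valuation))          # False
approve(valuation, "j.smith")
print(release(valuation).approved_by)  # j.smith
```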

Explainable AI tools for finance are in development, giving analysts clearer views of why a model reached its conclusions, making it easier to justify decisions to regulators or clients.

Training initiatives now aim to prepare finance professionals for AI collaboration—spotting model errors, challenging outputs, and applying context.

Role specialization is forming in some firms, where junior analysts supervise AI runs and senior analysts deliver the strategic insight.

Regulatory and Industry Adoption Outlook

Compliance standards for AI use in finance are taking shape, with regulators likely to set thresholds for accuracy and documentation. This could mean mandatory audit trails showing exactly how the AI arrived at a given valuation or risk score.
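What such an audit trail must contain is still being defined. As one hypothetical design, the sketch below logs each AI-generated figure with its inputs, source references, and model version, and chains entries with SHA-256 hashes so that later edits are detectable. The field names, model identifier, and example.com source URLs are placeholders.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    """SHA-256 over the entry's canonical JSON, excluding its own hash."""
    body = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list[dict], model_version: str, inputs: dict,
                 output: dict, sources: list[str]) -> dict:
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "sources": sources,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """True if every entry is unaltered and links to its predecessor."""
    prev = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev or entry["hash"] != _digest(entry):
            return False
        prev = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, "hypothetical-model-v1",
             {"total_assets": 1000.0, "ebit": 120.0}, {"z_score": 3.18},
             ["https://example.com/10-K#balance-sheet"])
append_entry(audit_log, "hypothetical-model-v1",
             {"net_income": 120.0, "equity": 800.0}, {"roe": 0.15},
             ["https://example.com/10-K#income-statement"])
print(verify_chain(audit_log))  # True
audit_log[0]["output"]["z_score"] = 4.0  # simulate tampering
print(verify_chain(audit_log))  # False
```

On its own, a hash chain only detects edits if the latest hash is also stored somewhere the editor cannot rewrite.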

Adoption patterns suggest starting with low-risk tasks, such as generating initial earnings projections, before moving to more sensitive analysis.

Risk safeguards, including human approval requirements for asset pricing or bankruptcy predictions, are becoming part of pilot deployments.

Firms are building proprietary models fine-tuned for their specific needs, from fraud detection to compliance monitoring. A bank, for instance, could train a model solely on its internal lending history to predict default risk more accurately.

Standardized methods for training and validation are being discussed to ensure that AI-generated financial analysis is consistent and auditable across the sector.

AI in Finance: Promising Partner, Not Autonomous Pilot

LLMs have moved past the experimental stage in financial analysis. They’re delivering measurable value on specific tasks, from ratio calculations to early risk detection. The role of AI in financial statement analysis will expand as models become more specialized, explainable, and integrated into enterprise workflows. But the evidence is clear that without human oversight, they can miscalculate, misinterpret, and miss the bigger picture.

The winning formula is simple: pair the model’s speed with accurate data and the analyst’s judgment. Let AI do the data grinding, then apply human experience to interpret, challenge, and finalize decisions. Daloopa MCP delivers accurate and fully verifiable fundamental data for LLMs, empowering analysts to trust AI in financial statement analysis while keeping full control over decision-making. Request a live demo to see how it works.